Lately, there’s been an article that keeps crossing my field of vision; it’s done so three or four times in the last week, likely because it was written about fathers and Father’s Day has just come and gone. The article is titled “The Myth of the Absent Black Father”. In it, Tara Culp-Ressler suggests that “hands-on parenting is similar among dads of all races” and, as I read it, that the idea that any racial differences in parenting might exist is driven by inaccurate racist stereotypes rather than accurate perceptions of reality. There are two points I want to make: one specific to the article itself, and the other about stereotypes and biases as they are discussed more generally. So let’s start with the myth-busting about parenting across races.

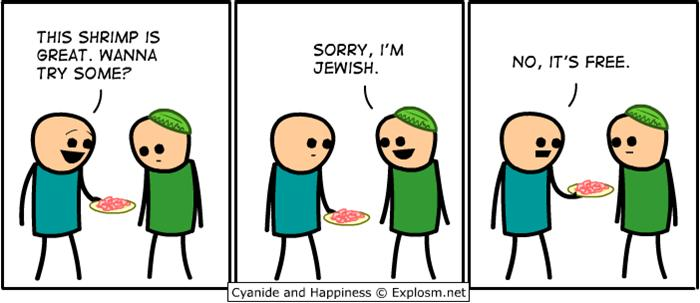

Network TV wasn’t touching this one with a 10-foot pole

The first point I want to make about the article in question is that the title is badly at odds with the data being reported on. The title, “The Myth of the Absent Black Father”, would seem to strongly suggest that the myth here is that black fathers tend to be absent when it comes to childcare (presumably relative to other racial groups, rather than in some absolute sense). Now if one wished to label this a myth, one should, presumably, examine data on the percentage of father-present and father-absent homes to demonstrate that rates of absent fathers do not differ substantially by race. What it means to be “present” or “absent” is, of course, a semantic issue that is likely to garner some disagreement. In the interest of maintaining something resembling a precise definition, then, let’s consider questions over which there is likely to be less disagreement, such as “across different races, are fathers equally likely to be married to the mother of their children?” or “does the father live in the same household as his child?”.

There exists plenty of data that speaks to those questions, and the answer to both is a clear “no”: across races, fathers are not equally likely to be married to, or living with, the mother of their children. According to census data from 2009, for instance, around 50% of black children were residing in single-mother homes, compared to 18% of white children, 8% of Asian children, and 25% of Hispanic children. With respect to births outside of marriage, data from 2011 found:

…72 percent of all births to black women, 66 percent to American Indian or Alaskan native women, and 53 percent to Hispanic women occurred outside of marriage, compared with 29 percent for white women, and 17 percent for Asian or Pacific Islander women.

In these two cases, then, it seems abundantly clear that, at least in relative terms, the “myth” of absent black fathers is not a myth at all; it’s a statistical reality (much as, when I last discussed “myths” about sex differences, most of those “myths” turned out to be true). This makes the title of the article seem more than a little misleading. If the “myth” of the absent black father isn’t about whether the father is actually present in the home, then what is the article focused on?

The article itself focuses on a report by the CDC which found that fathers who live with their children report being about equally involved in childcare over the past month, regardless of race; similar findings emerge for fathers who don’t live with their children. In other words, an absent father is an absent father, regardless of race, just as a present father is a present father, regardless of race. There were some slight differences between racial groups, sure, but nothing terribly noteworthy. That said, if one is concerned with the myth of the absent black father, comparing how much work present or absent fathers do across races seems to miss the mark. Yes, present fathers tend to do more than absent ones, but absent fathers are disproportionately represented in some groups. That point doesn’t seem to be contested by Tara; instead, she suggests that the reasons many black fathers don’t live with their children come down to social and economic inequalities. That explanation may well be true; it may well not be the whole picture, either. The reasons for this difference are likely complicated, as many things related to human social life are. However, even fully explaining a discrepancy does not make it stop existing, nor does it make it a myth.

But never mind that; your ax won’t grind itself

So the content of the article is a bit of a non sequitur from the title. The combination seemed a bit like me claiming it’s a myth that it’s cloudy outside because it’s not raining; though the two might be related, they’re not the same thing (and it very clearly is cloudy, in any case…). This brings me to the second, more general point I wanted to discuss: articles like these are common enough to be mundane. It doesn’t take much searching to find people writing about how (typically other) people (whom the author disagrees with or dislikes) hold incorrect stereotypes or have fallen prey to cognitive biases. As Steven Pinker once put it, a healthy portion of social psychological research focuses on:

… endless demonstrations that People are Really Bad at X, which are then “explained” by an ever-lengthening list of Biases, Fallacies, Illusions, Neglects, Blindnesses, and Fundamental Errors, each of which restates the finding that people are really bad at X.

Reading over much of the standard psychological literature, one might get the sense that people aren’t terribly good at being right about the world. In fact, one might even get the impression that our brains were designed to be wrong about a number of socially important things (such as how smart, trustworthy, or productive some people are, which might in turn affect our decisions about whether they would make good friends or romantic partners). If that were the case, it would pose a rather interesting biological mystery.

That’s not to say that being wrong per se is much of a mystery, since we lack both perfect information and perfect information-processing mechanisms. Rather, it would be strange if people’s brains were designed for such an outcome. If people’s minds were designed to use stereotypes as a source of information for decision making, and if those stereotypes were inaccurate, then people should be expected to make worse decisions than they would have made without them (and, importantly, being wrong tends to carry fitness-relevant consequences). That people continue to use these stereotypes (regardless of their race or sex) would then require an explanation. The most obvious explanation for the use of stereotypes would be, as in the example above, that they are not actually wrong. Before wondering why people use bad information to make decisions, it would serve us well to make sure that the information is, well, actually bad (again, not just imperfect, but actually incorrect).

“Bad information! Very bad!”

Unfortunately, as far as I’ve seen, proportionately few projects on topics like biases and stereotypes begin by testing for accuracy. Instead, they seem to begin with their conclusion (generally, “people are wrong about quite a number of things related to gender and/or race, and no meaningful differences could possibly exist between these groups, so any differential treatment of said groups must be baseless”) and then go in search of confirmatory evidence. That’s not to say that all stereotypes will necessarily be true, of course; just that figuring out whether they are ought to be step one (step two might then involve trying to understand any differences that do emerge in some meaningful way, keeping in mind that explaining those differences doesn’t make them disappear). Skipping that first step leads to labeling facts as “myths” or “racist stereotypes”, and that doesn’t get us anywhere we should want to be (though it can, apparently, get one pretty good publicity).