“‘I’m bored’ is a useless thing to say. I mean, you live in a great, big, vast world that you’ve seen none percent of. Even the inside of your own mind is endless; it goes on forever, inwardly, do you understand? The fact that you’re alive is amazing, so you don’t get to say ‘I’m bored.’” – Louis CK

One of my most vivid childhood memories, strangely enough, involves stand-up comedy. When I was younger, I always had the TV on in the background of my room, usually tuned to Comedy Central or cartoons. Young me would somewhat passively absorb all that comedic material and then regurgitate it, without context, around people I knew; a strategy that made me seem a bit weirder than I already was as a child. The joke I remember most vividly came from Jim Gaffigan: in it, he expressed surprise that male seahorses are the ones who give birth, suggesting that whichever one gives birth should just be called the female; he also postulated that the reason this wasn’t the case was that some stubborn scientist had made a mistake. One reason this joke stood out to me was that, as my education progressed, it became perhaps the best example I know of how people who don’t know much about a subject can be surprised by its ostensible quirks, quirks that actually make a great deal of sense to more knowledgeable individuals.

In fact, many things about the world are quite shocking to them.

In the case of seahorses, the mistake Jim was making is that biological sex in that species, as in many others, is defined by which sex produces the larger gametes (eggs vs. sperm). In seahorses, the females produce the eggs, but the males provide much of the parental investment, carrying the fertilized eggs in a pouch until they hatch. In species where the burden of parental care is shouldered more heavily by the males, we also tend to see reversals of mating preferences, with males becoming more selective about sexual partners than females. So not only was the labeling of the sexes no mistake, but there are lots of neat insights about psychology to be drawn from that knowledge. Admittedly, this knowledge also ruins the joke, but here at Popsych we take the utmost care to favor being a buzzkill over being inaccurate (because we have integrity and very few friends). In the interests of continuing that proud tradition, I would like to explain why the second part of the initial Louis CK joke – the part about us not being allowed to be bored because we ought to be able to think ourselves into entertainment and pleasure – is, at best, misguided.

That part of the joke contains an intuition shared by more than Louis, of course. Not long ago, an article was making its way around the popular psychological press about how surprising it was that people tended to find sitting with their own thoughts rather unpleasant. The paper, by Wilson et al (2014), contains 11 studies. Given that number, along with the general repetitiveness of the designs and the lack of detail presented in the paper itself, I’ll run through them as briefly as possible before getting to the meat of the discussion. The first six studies involved around 400 undergrads being brought into a “sparsely-furnished room” after having given up any forms of entertainment they were carrying, like their cell phones and writing implements. They were asked to sit in a chair and entertain themselves with only their thoughts for 6-15 minutes without falling asleep. Around half the participants rated the experience as negative, and a majority reported difficulty concentrating or that their mind wandered. The next study repeated this design with 169 subjects asked to sit alone at home without any distractions and just think. The undergraduates found the experience about as thrilling at home as they did in the lab, the only major difference being that now around a third of the participants reported “cheating” by doing things like going online or listening to music. Similar results were obtained in a community sample of about 60 people, a full half of whom reported cheating during the period.

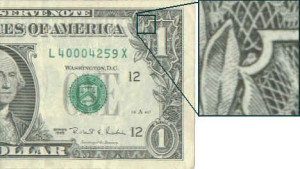

Finally, we reach the part of the study that made the headlines. Fifty-five undergrads were again brought into the lab. Their task began with rating the pleasantness of various stimuli, one of which was a mild electric shock (designed to be unpleasant, but not too painful). After doing this, they were given the sit-alone-and-think task, but were told they could, if they wanted, shock themselves again during their thinking period via an ankle bracelet they were wearing. Despite knowing that the shock was unpleasant and that shocking themselves was entirely optional, around 70% of the men and 25% of the women opted to deliver at least one shock to themselves during the thinking period. Even among the subjects who had said they would pay $5 to avoid being shocked again, 64% of the men and 15% of the women shocked themselves anyway. From this, Wilson et al (2014) concluded that thinking was so aversive that people would rather shock themselves than think if given the option, even if they didn’t like the shock.

“The increased risk of death sure beats thinking!”

The authors of the paper posited two reasons why people might dislike doing nothing but sitting around thinking, neither of which makes much sense to me. Their first explanation was that people might ruminate more about their own shortcomings when they don’t have anything else to do. Why people’s minds would be designed to do such a thing is a bit beyond me and, in any case, the results did not show that people defaulted to thinking they were failures. The second explanation was that people might find it unpleasant to be alone with their own thoughts because they had to be both the “script writer” and the “experiencer” of them. Why that would be unpleasant is also a bit beyond me and, again, the data did not support it either: participants did not find having someone else prompt the focus of their thoughts any more pleasant.

Missing from this paper, as from many papers in psychology, is an evolutionary-level, functional consideration of what’s going on here; what goes unexplored and unmentioned is the idea that thinking itself doesn’t really do anything. By that, I mean evolution, as a process, cannot “see” (i.e. select for) what organisms think or feel directly. The only thing evolution can “see” is what an organism does; how it behaves. That is to say: if you had one organism that had a series of incredibly pleasant thoughts but never did anything because of them, and another that never had any thoughts whatsoever but behaved in reproductively useful ways, the latter would win the evolutionary race every single time.

To further drive this point home, imagine for a moment an individual member of a species that could simply think itself into happiness; blissful happiness, in fact. What would be the likely result for the genes of that individual? In all probability, they would fare less well than those of counterparts who were not so inclined since, as we just reviewed, feeling good per se does not do anything reproductively useful. If the positive feelings derived from just thinking happy thoughts motivated any kind of behavior (which such feelings frequently do), and those feelings were not designed to be tied to some useful fitness outcome (which they wouldn’t be, in this case), the individual thinking himself into bliss would likely end up doing fewer useful things along with many maladaptive ones. The logic here is that there are many things an organism could do, but only a small subset of those things are actually worth doing. So, if organisms selected what to do on the basis of their emotions, but those emotions were being generated for reasons unrelated to what they were doing, they would select poor behavioral options more often than not. Importantly, we could make a similar argument for an individual that frequently thought himself into despair: to the extent that feelings motivate behaviors, and to the extent that those feelings are divorced from their fitness outcomes, we should expect bad fitness results.

Accordingly, we ought to also expect that thinking per se is not what people found aversive in these experiments. There’s no reason for the part of the brain doing the thinking (rather loosely conceived) to be hooked up to the pleasure or pain centers of the brain. Rather, what the subjects likely found aversive was the fact that they weren’t doing anything even potentially useful or fun. The participants in these studies were asked to do more than just think about something; they were also asked to forgo other activities, like browsing the internet, reading, exercising, or really anything at all. So not only were the subjects asked to sit around and do absolutely nothing, they were also asked not to do the other fun, useful things they might otherwise have spent their time on.

“We can’t figure out why he doesn’t like being in jail despite all that thinking time…”

Now, sure, at first glance it might seem a bit weird that people would shock themselves instead of just sitting there and thinking. However, I think that strangeness can be largely dispelled by considering two factors. First, there are probably some pretty serious demand characteristics at work here. When people know they’re in a psychology experiment and you prompt them to do only one thing (“but don’t worry, it’s totally optional. We’ll just be in the other room watching you…”), many of them might do it because they think that’s the point of the experiment (which, I might add, they would be completely correct about in this instance). There did not appear to be any control group to see how often people shocked themselves when not prompted to do so, or when the shock wasn’t their only option. I suspect few people would do so under those circumstances.

The second thing to consider is that most organisms, not just humans, would likely start behaving very strangely after a while if you locked them in an empty room. This is because, I would imagine, the minds of organisms are not designed to function in environments where there is nothing to do. Our brains have evolved to solve a variety of environmentally recurrent problems and, in this case, there seems to be no way to solve the problem of what to do with one’s time. The cognitive algorithms in their minds would be running through a series of “if-then” statements without finding a suitable “then”. The result is that their minds could start generating relatively random outputs. In a strange situation, the mind defaults to strange behaviors. To put the point simply, computers stop working well if you use them in the shower but, then again, they were never meant to go in the shower in the first place.

To return to Louis CK, I don’t think I get bored because I’m not thinking about anything, nor do I think that thinking about things is what people found aversive here. After all, we are all thinking about things, many things, constantly. Even when we are “distracted”, that doesn’t mean we are thinking about nothing; just that our attention is on something we might prefer it wasn’t. If thinking were what was aversive here, we should be feeling horrible pretty much all the time, which we don’t. Then again, maybe animals in captivity really do start behaving weirdly because they don’t want to be the “script writer” and “experiencer” of their own thoughts…

References: Wilson, T., Reinhard, D., Westgate, E., Gilbert, D., Ellerbeck, N., Hahn, C., Brown, C., & Shaked, A. (2014). Just think: The challenges of the disengaged mind. Science, 345, 75-77.