I happen to have an iPhone because, as many of you know, I think differently (not to be confused with the oddly phrased “Think Different”® slogan of the parent company, Apple), and nothing expresses those characteristics of intelligence and individuality about me better than my ownership of one of the most popular phones on the market. While the iPhone itself is a rather functional piece of technology, there is something about it (OK, related to it) that has consistently bothered me: the Facebook app I can download for it. The reason this app has been bugging me is that, at least as far as my recent memory allows, the app seems to have an unhealthy obsession with showing me the always-useless “top stories” news feed as my default, rather than the “most recent” feed I actually want to see. In fact, I recall that the last update to the app actually made the most recent feed more of a hassle to get to, rather than making it easily accessible. I had always wondered why there didn’t seem to be a simple way to change my default, as this seems like a fairly basic design fix. Not to get too conspiratorial about the whole thing, but this past week, I think I might have found part of the answer.

Which brings us to the matter of the Illuminati…

It’s my suspicion that the “top stories” feed has uses beyond simply trying to figure out which content I might want to see; this would be a good thing, because if the function were to figure out what I want to see, it’s pretty bad at that task. The “top stories” feed might also be used for the sinister purposes of conducting research (then again, the “most recent” feed can probably do that as well; I just really enjoy complaining about the “top stories” one). Since this new story (or is it a “top story”?) about Facebook conducting research with its users has been making the rounds in the media lately, I figured I would add my two cents to the incredibly tall stack of pennies the internet has collectively made in honor of the matter. Don’t get it twisted, though: I’m certainly not doing this in the interest of click-bait to capitalize on a flavor-of-the-week topic. If I were, I would have titled this post “These three things about the Facebook study will blow your mind; the fourth will make you cry” and put it up on Buzzfeed. Such behavior is beneath me because, as I said initially, I think different(ly)…

Anyway, on to the paper itself. Kramer et al. (2014) set out to study whether manipulating the kind of emotional content people were exposed to in others’ Facebook status updates had an effect on the emotional content of those people’s own later status updates. The authors believe such an effect would obtain owing to “emotional contagion”, which is the idea that people can “…transfer positive and negative moods and emotions to others”. As an initial semantic note, I think that such phrasing (the use of contagion as a metaphor) only serves to lead one to think incorrectly about what’s going on here. Emotions and moods are not contagious the way pathogens are: pathogens can be physically transferred from one host to another, while moods and emotions cannot. Instead, moods and emotions are generated by our minds from particular sets of inputs.

To understand that distinction quickly, consider two examples. In the first case, you and I are friends. You are sad, and I see you being sad. This, in turn, makes me feel sad. Have your emotions “infected” me? Probably not; consider what would happen if you and I were enemies instead: since I’m a bastard and I like to see people I don’t like fail, your sadness might make me feel happy. So it doesn’t seem to be your emotion per se that’s contagious; it might just be the case that I happen to generate similar emotions under certain circumstances. While this might seem like a relatively minor issue, similar thinking about ideas (specifically, that ideas themselves can be contagious) has led to a lot of rather unproductive discussion about “memes”. By talking about ideas or moods independently of the minds that create them, we end up with a rather impoverished picture of how our psychology works, I feel.

Which is just what the Illuminati want…

Moving past that issue, however, the study itself is rather simple: for a week in 2012, approximately 700,000 Facebook users had some of their news feed content hidden from them some of the time. Each time one of the subjects viewed their feed, depending on their condition, each post containing certain negative or positive words had a certain probability (between 10% and 90%) of being omitted. Unfortunately, the way the paper is written, it’s a bit difficult to get a sense of precisely how much content was omitted on average. However, as the authors note, this was done on a per-viewing basis, so content that was hidden during one viewing might well have shown up had the page been refreshed (and sitting there refreshing Facebook minute after minute is something many people might actually do). The content was also hidden only on the news feed: if subjects visited a friend’s page directly or sent or received any messages, all the content was available. So, for a week, some of the time, some of the content was omitted, but only on a per-view basis and only in one particular place (the news feed); not exactly the strongest manipulation I could think of.
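
To make the per-view point concrete, here is a minimal Python sketch. It assumes (my assumption, not something spelled out above) that every load of the feed is an independent draw in which a given emotional post is hidden with the same probability `p`. Under that assumption, the chance a post stays hidden across `k` loads is just `p` raised to the power `k`, so even at the 90% end of the range, a handful of refreshes gives a post a decent shot at appearing. The function names are hypothetical, made up for illustration.

```python
import random


def visible_on_some_view(omit_prob: float, views: int, rng: random.Random) -> bool:
    """True if a post shows up on at least one of `views` independent feed loads."""
    # On each load the post is hidden when the draw falls below omit_prob.
    return any(rng.random() >= omit_prob for _ in range(views))


def chance_hidden_every_time(omit_prob: float, views: int) -> float:
    """Closed form (assuming independent loads): hidden on every one of `views` loads."""
    return omit_prob ** views


def simulate(omit_prob: float, views: int, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same probability, as a sanity check on the formula."""
    rng = random.Random(seed)
    hidden_always = sum(not visible_on_some_view(omit_prob, views, rng) for _ in range(trials))
    return hidden_always / trials


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        for k in (1, 3, 5):
            print(f"omit p={p:.1f}, loads={k}: exact={chance_hidden_every_time(p, k):.4f}, "
                  f"simulated={simulate(p, k):.4f}")
```

The simulation is redundant with the closed form; it is there only to show the formula matches the "hide it independently on each load" description.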

The effect of that manipulation was measured by examining what percentage of positive or negative words the subjects themselves used in their status updates during the experimental period. Subjects who saw more positive words in their feed tended to post more positive words themselves, and vice versa for negative words. Sort of, anyway. OK, just barely. In the condition where subjects had access to fewer negative words, the average subject’s status updates were made up of about 5.3% positive words and 1.7% negative words; when subjects had access to fewer positive words, these percentages plummeted/jumped to…5.15% and 1.75%, respectively. Compared to the control groups, then, these changes amount to increases or decreases of between 0.001 and 0.02 standard deviations of emotional word usage or, as we might say in precise statistical terms, effects so astonishingly small they might as well not exist.
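
For a rough sense of scale, the raw differences between the two experimental conditions can be worked out from the four percentages quoted above. This is a minimal Python sketch using only those numbers; it deliberately does not compute standardized (standard-deviation-based) effect sizes, since that would require the spread of word usage across users, which isn't given here. The constant and function names are hypothetical.

```python
# Average share of words in status updates, in percent, as quoted in the post.
# Hypothetical names; these four numbers are the only inputs.
FEWER_NEGATIVE = {"positive": 5.30, "negative": 1.70}  # feed had fewer negative posts
FEWER_POSITIVE = {"positive": 5.15, "negative": 1.75}  # feed had fewer positive posts


def shift(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point change, change relative to `before`, in percent)."""
    points = after - before
    return points, points / before * 100


def summarize() -> dict[str, tuple[float, float]]:
    """Change in each word category going from the fewer-negative to the fewer-positive condition."""
    return {kind: shift(FEWER_NEGATIVE[kind], FEWER_POSITIVE[kind]) for kind in ("positive", "negative")}


if __name__ == "__main__":
    for kind, (points, relative) in summarize().items():
        # A percentage-point change times 100 gives words per 10,000 words written.
        print(f"{kind}: {points:+.2f} points ({relative:+.1f}% relative), "
              f"about {abs(points) * 100:.0f} words per 10,000")
```

Run as-is, it reports a drop of 0.15 percentage points in positive words and a rise of 0.05 in negative words: roughly 15 and 5 words, respectively, out of every 10,000 written.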

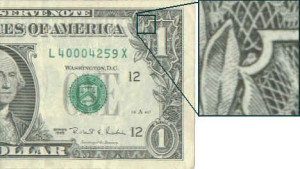

“Can’t you see it? The effect is right there; plain as day!”

What we have here, then, is an exceedingly weak and probabilistic manipulation that had about as close to no net effect as one could reasonably get, built on a view of how the mind works that is at least partially (if only metaphorically) deficient. The discussion of the ethical issues people perceived in the research appears to have vastly overshadowed the fact that the research itself wasn’t very strong or interesting. So for all of you outraged over this study for fear that people were harmed: don’t worry. I would say the evidence is good that no appreciable harm came of it.

I would also say that other ethical criticisms of the study are a bit lacking. I’ve seen people raise concerns that Facebook had no business seeing whether bad moods could be induced by showing people a disproportionate number of negative status updates; I’ve also seen concerns that the people posting those negative updates might not have received the support they needed if other people were blocked from seeing them. The first thing to note is that Facebook did not increase the absolute number of positive or negative posts; it only (kind of) hid some of them from appearing (some of the time, to some people, in one particular place). The second is that, taken together, those two criticisms put Facebook in a no-win situation: it gets criticized whether or not it reduces the number of negative stories people see. Facebook is either failing to get people the help they need or bumming us out by disproportionately exposing us to people who need help. Finally, I would add that if you missed a friend’s major life event, positive or negative, because Facebook might have probabilistically omitted a status update on a given visit, then you’re likely not very good friends with that person anyway, and probably aren’t close enough to realistically lend help or take much pleasure from the event.

References: Kramer, A., Guillory, J., & Hancock, J. (2014). Experimental evidence of massive-scale emotional contagion through social networks. Proceedings of the National Academy of Sciences of the United States of America, doi: 10.1073/pnas.1320040111

Nothing to add except that I want to emphasize that I too “think different(ly)” and am glad someone succinctly summed up why this study is largely a non-issue. The fact that they were allowed to get away with the anchoring on their graph’s y-axis is also quite remarkable.

The visual anchoring issue is pretty common in most psychology research, from what I’ve seen. Nothing makes a 0.1 difference on a ten-point scale pop like zooming way, way in.

I’ve definitely seen it, but it’s not so common in PNAS articles, and it is rarely as egregious as this example.