And if you think I stole that title from a popular song, you’re very wrong

Hawaii recently introduced some bills aimed at prohibiting the sale of games with for-purchase loot boxes to anyone under 21. For those not already in the know concerning the world of gaming, loot boxes are effectively semi-random grab bags of items within video games. These loot boxes are usually either received by players as a reward for achieving something within a game (such as leveling up) or purchased with currency, be that in-game currency or real-world money. Specifically, then, the bills in question are aimed at games that sell loot boxes for real money, attempting to keep them out of the hands of people under 21.

Just like tobacco companies aren’t permitted to advertise to minors out of fear that children will come to find smoking an interesting prospect, the fear here is that children who play games with loot boxes might develop a taste for gambling they otherwise wouldn’t have. At least that’s the most common explicit reason for this proposal. The gaming community seems to be somewhat torn about the issue: some gamers welcome the idea of government regulation of loot boxes while others are skeptical of government involvement in games. In the interest of full disclosure for potential bias – as a long-time gamer and professional loner – I consider myself to be a part of the latter camp.

My hope today is to explore this debate in greater detail. There are lots of questions I’m going to discuss, including (a) whether loot boxes are gambling, (b) why gamers might oppose this legislation, (c) why gamers might support it, (d) what other concerns might be driving the acceptance of regulation within this domain, and (e) whether these kinds of random mechanics actually make for better games.

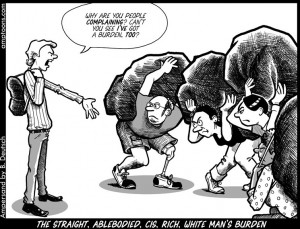

Let’s begin our investigation in gaming’s seedy underbelly

To set the stage, a loot box is just what it sounds like: a randomized package of in-game items (loot) which are earned by playing the game or purchased. In my opinion, loot boxes are gambling-adjacent types of things, but not bona fide gambling. The prototypical example of gambling is along the lines of a slot machine. You put money into it and have no idea what you’re going to get out. You could get nothing (most of the time), a small prize (a few of the times), or a large prize (almost never). Loot boxes share some of those features – the paying money for randomized outcomes – but they don’t share others: first, with loot boxes there isn’t a “winning” and “losing” outcome in the same way there is with a slot machine. If you purchase a loot box, you should have some general sense as to what you’re buying; say, 5 items with varying rarities. It’s not like you sometimes open a loot box and there are no items, other times there are 5, and other times there are 20 (though more on that in a moment). The number of items you receive is usually set even if the contents are random. More to the point, the items you “receive” you often don’t even own; not in the true sense. If the game servers get shut down or you violate terms of service, for instance, your account and its items get deleted; they disappear from existence, and you don’t get to sue someone for stealing from you. There is also no formal cashing out of many of these games. In that sense, there is less of a gamble in loot boxes than what we traditionally consider gambling.

Importantly, the value of these items is debatable. Usually players really want to open some items and don’t care about others. In that sense, it’s quite possible to open a loot box and get nothing of value, as far as you’re concerned, while hitting jackpots in others. However, if that valuation is almost entirely subjective in nature, then it’s hard to say that not getting what you want is losing while getting what you do is winning, as that’s going to vary from person to person. What you are buying with loot boxes isn’t a chance at a specific item you want; it is a set number of random items from a pool of options. To put that into an incomplete but simple example, if you put money into a gumball machine and get a gumball, that’s not really a gamble and you didn’t really lose. It doesn’t become gambling, nor do you lose, if the gumballs are different colors/flavors and you wanted a blue one but got a green one.

One potential exception to this equal-value argument arises when the items opened aren’t bound to the opener; that is, they can be traded or sold to other players. You don’t like your gumball flavor? Well, now you can trade your friend your gumball for theirs, or even buy their gumball from them. When this possibility exists, secondary markets pop up for the digital items where some can be sold for lots of real money while others are effectively worthless. Now, as far as the developers are concerned, all the items can have the same value, which makes it look less like gambling; it’s the secondary market that makes it look more like gambling, but the game developers aren’t in control of that.

Kind of like these old things

An almost-perfect metaphor for this can be found in the sale of baseball cards (which I bought when I was younger, though I don’t remember what the appeal was): packs containing a set number of cards – let’s say 10 – are purchased for a set price – say $5 – but the contents of those packs are randomized. The value of any single card, from the perspective of the company making them, is 1/10 the cost of the pack. However, some people value specific cards more than others; a rookie card of a great player is more desired than the card for a veteran who never achieved anything. In such cases, a secondary market crops up among those who collect the cards, and those collectors are willing to pay a premium for the desired items. One card might sell for $50 (worth 10 times the price of a pack), while another might be unable to find a buyer at all, effectively worth $0.

This analogy, of course, raises other questions about the potential legality of existing physical items, like sports cards, or those belonging to any trading card game (like Magic: The Gathering, Pokemon, or Yugioh). If digital loot boxes are considered a form of gambling and might have effects worth protecting children from, then their physical counterparts likely pose the same risks. If anything, the physical versions look more like gambling because at least some digital items cannot be traded or sold between players, while all physical items pose that risk of developing real value on a secondary market. Imagine putting money into a slot machine, hitting the jackpot, and then getting nothing out of it. That’s what many virtual items amount to.

Banning the sale of loot boxes in gaming to people under the age of 21 likely entails banning the sale of card packs to them as well. While the words “slippery slope” are usually used together with the word “fallacy,” there does seem to be a very legitimate slope here worth appreciating. The parallels between loot boxes and physical packs of cards are almost perfect (and, where they differ, card packs look more like gambling; not less). Strangely, I’ve seen very few voices in the gaming community suggesting that the sale of packs of cards to minors should be banned; some do (mostly for consistency’s sake; as far as I’ve seen, they almost never raise the issue independently of the digital loot box issue), but most don’t seem concerned with the matter. The bill being introduced in Hawaii doesn’t seem to mention baseball or trading cards anywhere either (unless I missed it), which would be a strange omission. I’ll return to this point later when we get to talking about the motives behind the approval of government regulation in the digital realm coming from gamers.

The first step towards addiction to that sweet cardboard crack

But, while we’re on the topic of slippery slopes, let’s also consider another popular game mechanic that might be worth examination: randomized item drops from in-game enemies. These aren’t items you purchase with money (at least not in game), but rather ones you purchase with time and effort. Let’s consider one of the more well-known games to use this: WoW (World of Warcraft). In WoW, when you kill enemies with your character, you may receive valued items from their corpses as you loot the bodies. The items are not found in a uniform fashion: some are very common and others quite rare. I’ve watched a streamer kill the same boss dozens of times over the course of several weeks hoping to finally get a particular item to drop. There are many moments of disappointment and discouragement, complete with feelings of wasted time, after many attempts are met with no reward. But when the item finally does drop? There is a moment of elation and celebration, complete with a chatroom full of cheering viewers. If you could only see the emotional reaction of the people to getting their reward and not their surroundings, my guess is that you’d have a hard time differentiating a gamer getting a rare drop they wanted from someone opening the desired item out of a loot box for which they paid money.

What I’m not saying is that I feel random loot drops in World of Warcraft are gambling; what I am saying is that if one is concerned about the effects loot boxes might have on people when it comes to gambling, they share enough in common with randomized loot drops that the latter are worth examining seriously as well. Perhaps it is the case that the item a player is after has a fundamentally different psychological effect on them depending on whether chances at obtaining it are purchased with real money, in-game currency, or play time. Then again, perhaps there is no meaningful difference; it’s not hard to find stories of gamers who spent more time than is reasonable trying to obtain rare in-game items to the point that it could easily be labeled an addiction. Whether buying items with money or time has different effects is a matter that would need to be settled empirically. But what if they were fundamentally similar in terms of their effects on the players? If you’re going to ban loot boxes sold for cash out of fear of the impact they have on children’s propensity to gamble or develop a problem, you might also end up with a good justification for banning randomized loot drops in games like World of Warcraft as well, since both resemble pulling the lever of a slot machine in enough meaningful ways.

Despite that, I’ve seen very few people in the pro-regulation camp raise the concern about the effects that World of Warcraft loot tables are having on children. Maybe it’s because they haven’t thought about it yet, but that seems doubtful, as the matter has been brought up and hasn’t been met with any concern. Maybe it’s because they view the costs of paying real money for items as more damaging than paying with time. Either way, it seems that even after thinking about it, those who favor regulation of loot boxes largely don’t seem to care as much about card games, and even less about randomized loot tables. This suggests there are other variables beyond the presence of gambling-like mechanics underlying their views.

“Alright; children can buy some lottery tickets, but only the cheap ones”

But let’s talk a little more about the fear of harming children in general. Not that long ago there was an examination of other aspects of video games: specifically, the component of violence often found and depicted within them. Indeed, research into the topic is still a thing today. The fear sounded like a plausible one to many: if violence is depicted within these games – especially within the context of achieving something positive, like winning by killing the opposing team’s characters – those who play the games might become desensitized to violence or come to think it acceptable. In turn, they would behave more violently themselves and be less interested in alleviating violence directed against others. This fear was especially pronounced when it came to children who were still developing psychologically and potentially more influenced by the depictions of violence.

Now, as it turns out, those fears appear to be largely unfounded. Violence has not been increasing as younger children have been playing increasingly violent video games more frequently. The apparent risk factor for increasing aggressive behavior (at least temporarily; not chronically) was losing at the game or finding it frustrating to play (such as when the controls feel difficult to use). The violent content per se didn’t seem to be doing much causing when it came to later violence. While players who are more habitually aggressive might prefer somewhat different games than those who are not, that doesn’t mean the games are causing them to be violent.

This gives us something of a precedent for worrying about the face-validity of the claims that loot boxes are liable to make gambling seem more appealing on a long-term scale. It is possible that the concern over loot boxes represents more of a moral panic on the part of the legislators, rather than a real issue having a harmful impact. Children who are OK with ripping an opponent’s head off in a video game are unlikely to be OK with killing someone for real, and violence in video games doesn’t seem to make the killing seem more appealing. It might similarly be the case that opening loot boxes makes people no more likely to want to gamble in other domains. Again, this is an empirical matter that requires good evidence to prove the connection (and I emphasize the word good because there exists plenty of low-quality evidence that has been used to support the claim that violence in video games causes violence in real life).

Video games inspire cosplay; not violence

If it’s not clear at this point, I believe the reasons that some portion of the gaming community supports this type of regulation have little to nothing to do with their concerns about children gambling. For the most part, children do not have access to credit cards and so cannot themselves buy lots of loot boxes, nor do they have access to lots of cash they can funnel into online gift cards. As such, I suspect that very few children do serious harm to themselves or their financial future when it comes to buying loot boxes. The ostensible concern for children is more of a plausible-sounding justification than one actually doing most of the metaphorical cart-pulling. Instead, I believe the concern over loot boxes (at least among gamers) is driven by two more mundane concerns.

The first of these is simply the perceived cost of a “full” game. There has long been a growing discontent in the gaming community over DLC (downloadable content), where new pieces of content are added to a game after release for a fee. While that might seem like the simple purchase of an expansion pack (which is not a big deal), the discontent arises where a developer is perceived to have made a “full” game already, but then cut sections out of it purposefully to sell later as “additional” content. To place that into an example, you could have a fighting game that was released with 8 characters. However, the game became wildly popular, resulting in the developers later putting together 4 new characters and selling them because demand was that high. Alternatively, you could have a developer that created 12 characters up front, but only made 8 available in the game to begin with, knowingly saving the other 4 to sell later when they could have just as easily been released in the original. In that case, intent matters.

Loot boxes do something similar psychologically at times. When people go to the store and pay $60 for a game, then take it home to find out the game wants them to pay $10 or more (sometimes a lot more) to unlock parts of the game that already exist on the disk, that feels very dishonest. You thought you were purchasing a full game, but you didn’t exactly get it. What you got was more of an incomplete version. As games become increasingly likely to use these loot boxes (as they seem to be profitable), the true cost of games (having access to all the content) will go up.

Just kidding! It’s actually 20-times more expensive

Here is where the distinction between cosmetic and functional (pay-to-win) loot boxes arises. For those not in the know about this, the loot boxes that games sell vary in terms of their content. In some games, these items are nothing more than additional colorful outfits for your characters that have no effect on game play. In others, you can buy items that actually increase your odds of winning a game (items that make your character do more damage or automatically improve their aim). Many people who dislike loot boxes seem to be more OK (or even perfectly happy) with them so long as the items are only cosmetic. So long as they can win the game as effectively spending $0 as they could spending $1000, they feel that they own the full version. When it feels like the game you bought gives an advantage to players who spent more money on it, it again feels like the copy of the game you bought isn’t the same version as theirs; that it’s not as complete an experience.

Another distinction arises here in that I’ve noticed gamers seem more OK with loot boxes in games that are Free-to-Play. These are games that cost nothing to download, but much of their content is locked up-front. To unlock content, you usually invest time or money. In such cases, the feeling of being lied to about the cost of the game doesn’t really exist. Even if such free games are ultimately more expensive than traditional ones if you want to unlock everything (often much more expensive if you want to do so quickly), the actual cost of the game was $0. You were not lied to about that, and anything else you spent afterwards was completely voluntary. Here the loot boxes look more like a part of the game than an add-on to it. Now this isn’t to say that some people don’t dislike loot boxes even in free-to-play games; just that they mind them less.

“Comparatively, it’s not that bad”

The second, related concern, then, is that developers might be making design decisions that ultimately make games worse to try and sell more loot boxes. To put that in perspective, there are some cases of win/win scenarios, like when a developer tries to sell loot boxes by making a game that’s so good people enjoy spending money on additional content to show off how much they like it. Effectively, people are OK with paying for quality. Here, the developer gets more money and the players get a great game. But what happens when there is a conflict? Suppose a decision needs to be made that will either (a) make the game play experience better but sell fewer loot boxes, or (b) make the game play experience worse but sell more loot boxes. However frequently these decisions need to be made, they assuredly are made at some points.

To use a recent example, many of the rare items in the game Destiny 2 were found within an in-game store called Eververse. Rather than unlocking rare items through months of completing game content over and over again (like in Destiny 1), many of these rare, cosmetic items were found only within Eververse. You could unlock them with time, in theory, but only at very slow rates (which were found to actually be intentionally slowed down by the developers if a player put too much time into the game). In practice, the only way to unlock these rare items was through spending money. So, rather than put interesting and desirable content into the game as a reward for being good at it or committed to it, it was largely walled off behind a store. This was a major problem for people’s motivation to continue playing the game, but it traded off against people’s willingness to spend money on the game. These conflicts created a worse experience for a great many players. It also yielded the term “spend-game content” to replace “end-game content.” More loot boxes in games potentially means more decisions like that will be made where reasons to play the game are replaced with reasons to spend money.

Another such system was discussed in regards to a potential patent by Electronic Arts (EA), though as far as I’m aware it has not made its way into a real game yet. This system revolved around online, multiplayer games with items available for purchase. The system would be designed such that players who spent money on some particular item would be intentionally matched against players of lower skill. As the lower-skill players would be easier for the buyer to beat with their new items, it would make the purchaser feel like their decision to buy was worth it. By contrast, the lower-skill player might become impressed by how well the player with the purchased item performed and feel they would become better at the game if they too purchased it. While this might encourage players to buy in-game items, it would yield an ultimately less-competitive and less interesting matchmaking system. While such systems are indeed bad for the game play experience, it is at least worth noting that such a system would work whether the items being sold came from loot boxes or were purchased directly.

“Buy the golden king now to get matched against total scrubs!”

If I’m right and the reasons some gamers favor regulation center around the cost and design direction of games, why not just say that instead of talking about children and gambling? Because, frankly, it’s not very persuasive. It’s too selfish of a concern to rally much social support. It would be silly for me to say, “I want to see loot boxes regulated out of games because I don’t want to spend money on them and think they make for worse gaming experiences for me.” People would just tell me to either not buy loot boxes or not buy games with loot boxes. Since both suggestions are reasonable and I can do them already, the need for regulation isn’t there.

Now if I decide to vote with my wallet and not buy games with loot boxes, that won’t have any impact on the industry. My personal impact is too small. So long as enough other people buy those games, they will continue to be produced and my enjoyment of the games will be decreased because of the aforementioned cost and design issues. What I need to do, then, is convince enough people to follow my lead and not buy these games either. It wouldn’t be until enough gamers aren’t buying the games that there would be incentives for developers to abandon that model. One reason to talk about children, then, is because you don’t trust that the market will swing in your favor. Rather than allow the market to decide freely, you can say that children are incapable of making good choices and are being actively harmed. This will rally more support to tip the scales of that market in your favor by forcing government intervention. If you don’t trust enough people will vote with their wallet like you do, make it illegal for younger gamers to be allowed to vote in any other way.

A real concern about children, then, might not be that they will come to view gambling as normal, but rather that they will come to view loot boxes (or other forms of added content, like dishonest DLC) in games as normal. They will accept that games often have loot boxes and they will not be deterred from buying titles that include them. That means more consumers now and in the future who are willing to tolerate or purchase loot boxes/DLC. That means fewer games without them which, in turn, means fewer options available to those voting with their wallets and not buying them. Children and gambling are brought up not because they are the gamer’s primary target of concern, but rather because they’re useful for a strategic end.

Of course, there are real issues when it comes to children and these microtransactions: they don’t tend to make great decisions, and they sometimes get access to their parents’ credit card information and then go on insane spending sprees in their games. This type of family fraud has been the subject of previous legal disputes, but it is important to note that this is not a loot box issue per se. Children will just as happily waste their parents’ money on known quantities of in-game resources as they would on loot boxes. It’s also more a matter of parental responsibility and purchase verification than it is the heart of the matter at hand. Even if children do occasionally make lots of unauthorized purchases, I don’t think major game companies are counting on that as an intended source of vital revenue.

They start ballin’ out so young these days

For what it’s worth, I think loot boxes do run certain risks for the industry, as outlined above. They can make games costlier than they need to be and they can result in design decisions I find unpleasant. In many regards I’m not a fan of them. I just happen to think that (a) they aren’t gambling and (b) they don’t require government intervention to remove on the grounds that they are harming children by persuading them that gambling is fun and leading to more of it in the future. I think any kinds of microtransactions – whether random or not – can result in the same kinds of harms, addiction, and reckless spending. However, when it comes to human psychology, I think loot boxes are designed more as a tool to fit our psychology than one that shapes it, not unlike how water takes the shape of the container it is in and not the other way around. As such, it is possible that some facets of loot boxes and other random item generation mechanics make players engage with the game in a way that yields more positive experiences, in addition to the costs they carry. If these gambling-like mechanics weren’t, in some sense, fun, people would simply avoid games with them.

For instance, having content that one is aiming to unlock can provide a very important motivation to continue playing a game, which is a big deal if you want your game to last and be interesting for a long time. My most recent example of this is Destiny 2 again. Though I didn’t play the first Destiny, I have a friend who did and who told me about it. In that game, items randomly dropped, and they dropped with random perks. This means you could get several versions of the same item, but have them all be different. It gave you a reason and a motivation to be excited about getting the same item for the 100th time. This wasn’t the case in Destiny 2. In that game, when you got a gun, you got the gun. There was no need to try and get another version of it because that didn’t exist. So what happened when Destiny 2 removed the random rolls from items? The motivation for hardcore players to keep playing long-term largely dropped off a cliff. At least that’s what happened to me. The moment I got the last piece of gear I was trying to achieve, a sense of, “why am I playing?” washed over me almost instantly and I shut the game off. I haven’t touched it since. The same thing happened to me in Overwatch when I unlocked the last skin I was interested in at the time. Had all that content been available from the start, the turning-off point likely would have come much sooner.

As another example, imagine a game like World of Warcraft, where a boss has a random chance to drop an amazing item. Say this chance is 1 in 500. Now imagine an alternative reality where this practice is banned because it’s deemed to be too much like gambling (not saying it will be; just imagine that it was). Now the item is obtained in the following way: whenever the boss is killed, it is guaranteed to drop a token. After you collect 500 of those tokens, you can hand them in and get the item as a reward. Do you think players would have a better time under the gambling-like system, where each boss kill represents the metaphorical pull of a slot machine lever, or in the consistent condition? I don’t know the answer to that question offhand, but what I do know is that collecting 500 tokens sure sounds boring, and that’s coming from a person who values consistency and saving, and who doesn’t enjoy traditional gambling. No one is going to make a compilation video of people reacting to finally collecting 500 tokens because all you’d have is another moment just like the last 499 moments where the same thing happened. People would – and do – make compilation videos of streamers finally getting valuable or rare items, as such moments are more entertaining for viewers and players alike.
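
For anyone who wants rough numbers on that comparison, here is a minimal sketch in Python. It assumes each boss kill is an independent roll with a fixed 1-in-500 chance, which is a simplification for illustration and not a claim about how any real game computes drops; the function names are my own and hypothetical.

```python
import math

# Assumption: every kill is an independent roll with the same drop chance.
DROP_CHANCE = 1 / 500
TOKENS_NEEDED = 500


def chance_of_no_drop(kills: int, drop_chance: float = DROP_CHANCE) -> float:
    """Probability the item has still not dropped after `kills` kills."""
    return (1 - drop_chance) ** kills


def expected_kills_random(drop_chance: float = DROP_CHANCE) -> float:
    """Average number of kills until the first drop (geometric distribution)."""
    return 1 / drop_chance


def median_kills_random(drop_chance: float = DROP_CHANCE) -> int:
    """Smallest number of kills by which at least half of players have the item."""
    return math.ceil(math.log(0.5) / math.log(1 - drop_chance))


def kills_with_tokens(tokens_needed: int = TOKENS_NEEDED) -> int:
    """Under the token system every player needs exactly this many kills."""
    return tokens_needed


if __name__ == "__main__":
    print("Average kills, random drop:", expected_kills_random())
    print("Median kills, random drop:", median_kills_random())
    print("Kills needed, token system:", kills_with_tokens())
    print("Share still empty-handed after 500 kills:",
          round(chance_of_no_drop(500), 3))
```

Under those assumptions, both systems cost 500 kills on average, but the random version spreads players out: half of them have the item within about 350 kills, while a bit more than a third still have nothing after 500. The token system trades away both the lucky early drop and the unlucky long drought.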