The relationship between my cat and me has been described by many as a rather close one. Whenever I return home after almost any amount of time away, I’m greeted by what appears to be a rather excited animal that meows and purrs excessively, all while rubbing on and rolling around my feet. In turn, I feel a great deal of affection towards my cat, and derive feelings of comfort and happiness from taking care of and petting her. Like the majority of Americans, I happen to be a pet owner, and to most people these experiences and ones like them will sound perfectly normal and relatable. I would argue, however, that they are, in fact, very strange feelings, biologically speaking. Despite the occasional story of cross-species fostering, other animals do not seem to behave in ways that indicate they seek out anything resembling pet ownership. It’s often not until someone points out that other species do not make a habit of keeping pets that one realizes how strange a phenomenon pet ownership can be. Finding that bears, for instance, reliably took care of non-bears, providing them with food and protection, would be a biological mystery of the first degree.

And that I get most of my work done like this seems normal to me.

So why do people seem to be so fond of pets? My guess is that the psychological mechanisms that underlie pet ownership in humans are not designed for that function per se. I say that for a few reasons, notable among them the time and resource factors. First, psychological adaptations take a good deal of time to be shaped by selective forces, which means long periods of co-residence between animals and people would be required for any dedicated adaptations to have formed. Though it’s no more than a guess on my part, I would assume that conditions that made extended periods of co-residence more probable would likely not have arisen prior to the advent of agriculture and geographically stable human populations. The second issue involves the cost/benefit ratios: pets require a good deal of investment, at least in terms of food. In order for there to have been any selective pressure to keep pets, the benefits provided by the pets would have needed to more than offset the costs of their care, and I don’t know of any evidence in that regard. Dogs might have been able to pull their weight in terms of assisting in hunting and protection, but it’s uncertain; other pets – such as cats, birds, lizards, or even the occasional insect – probably did not. While certain pets (like cats) might well have been largely self-sufficient, they don’t seem to offer much in the way of direct benefits to their owners either. No benefits means no distinct selection, which means no dedicated adaptations.

Given that there are unlikely to be dedicated pet modules in our brains, what other systems are good candidates for explaining the tendency towards seeking out pets? The most promising candidate that comes to mind is the set of already-existing systems designed for the care of our own, highly dependent offspring. Positing that pet care is a byproduct of our infant care would manage to skirt both the issues of time and resources; our minds were designed to endure such costs to deliver benefits to our children. It would also allow us to better understand certain facets of the ways people behave towards their pets, such as the “aww” reaction people often have to both pets (especially young ones, like kittens and puppies) and babies, as well as the frequent use of motherese (baby-talk) when talking to both pets and children (to compare speech directed at pets and babies see here and here. Note as well that you don’t often hear adults talking to each other in this manner). Of course, were you to ask people whether their pets are their biological offspring, many would give the correct response of “no”. These verbal responses, however, do not indicate that other modules of the brain – ones that aren’t doing the talking – “know” that pets aren’t actually your offspring, in much the same way that parts of the brain dedicated to arousal don’t “know” that generating arousal to pornography isn’t going to end up being adaptive.

There is another interesting bit of information concerning pet ownership that I feel can be explained through the pets-as-infants model, but to get to it we need to first consider some research on moral dilemmas by Topolski et al. (2013). The dilemma they used is a favorite of mine, and of the psychological community more generally: a variant of the trolley dilemma. In this study, 573 participants were asked to respond to a series of 12 similar moral dilemmas, all of which had the same basic setup: there is a speeding bus that is about to hit either a person or an animal, both of which have wandered out into the street. The subject only has time to save one of them, and is asked which they would prefer to save. (Note: each subject responded to all 12 dilemmas, which might result in some carryover effects. A between-subjects design would have been stronger here. Anyway…) The identities of the animal and person in the dilemma were varied across the conditions: the animal was either the subject’s pet (subjects were asked to imagine one if they didn’t currently have one) or someone else’s pet, and the person was either a foreign tourist, a hometown stranger, a distant cousin, a best friend, a sibling, or a grandparent.

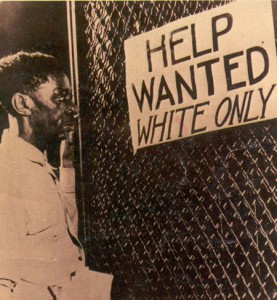

In terms of saving someone else’s pet, people generally didn’t seem terribly interested: the figures ranged from a high of about 12% of subjects choosing someone else’s pet over a foreign tourist to a low of approximately 2% of subjects picking the strange pet over their own sibling. The willingness to save the animal in question rose substantially when it was the subject’s own pet being considered, however: while people were still about as likely to save their own pet as someone else’s in cases involving a grandparent or sibling, approximately 40% of subjects indicated they would save their pet over a foreign tourist or a hometown stranger (for the curious, about 23% would save their pet over a distant cousin and only about 5% would save their pet over a close friend. For the very curious, I could see myself saving my pet over the strangers or distant cousin). The relationship between pet owners and their animals appears to be strong enough to, quite literally, make almost half of them throw another human stranger under the bus to save their pet’s life.

This is a strange response to give, but not for the obvious reasons: given that our pets are being treated as our children by certain parts of our brain, the question is why anyone, let alone a majority of people, would be willing to sacrifice the lives of their pets to save a stranger. I don’t expect, for instance, that many people would be willing to let their baby get hit by the bus to save a tourist, so why the discrepancy? Three potential reasons come to mind. First, the pets are only “fooling” certain psychological systems. While some parts of our psychology might be treating pets as children, other parts may well not be (children do not typically look like cats or dogs, for instance). The second possible reason involves the clear threat of moral condemnation. As we saw, people are substantially more interested in saving their own pets, relative to a stranger’s pet. By extension, it’s probably safe to assume that other, uninvolved parties wouldn’t be terribly sympathetic to your decision to save an animal over a person. So the costs of saving the pet might well be perceived as higher. Similarly, the potential benefits of saving an animal may typically be lower than those of saving another person, as saved individuals and their allies are more likely to do things like reciprocate help, relative to a non-human. Sure, the pet’s owner might reciprocate, but the pet itself would not.

The final potential reason that comes to mind concerns that interesting bit of information I alluded to earlier: women were more likely to indicate they would save the animal in all conditions, and often substantially so. Why might this be the case? The most probable answer to that question again returns to the pets-as-children model: whereas women have not had to face the risk of genetic uncertainty in their children, men have. This risk makes males generally less interested in investing in children and could, by extension, make them less willing to invest in pets over people. The classic phrase, “Momma’s babies; Daddy’s maybes” could apply to this situation, albeit in an under-appreciated way (in other words, men might be harboring doubts about whether the pet is actually ‘theirs’, so to speak). Without reference to parental investment theory – which the study does not contain – explaining this sex difference in willingness to pick animals over people would be very tricky indeed. Perhaps it should come as no surprise, then, that the authors do not do a good job of explaining their findings, opting instead to redescribe them in terms of a crude and altogether useless distinction between “hot” and “cold” types of cognitive processing.

“…and the third type of cognitive processing was just right”

In a very real sense, some parts of our brain treat our pets as children: they love them, care for them, invest in them, and wish to save them from harm. How such tendencies develop, and what cues our minds use to distinguish between our own offspring, the offspring of others, our pets, and non-pet animals, are very interesting matters, and our understanding of them is likely to be furthered by considering parental investment theory. Are people raised with pets from a young age more likely to view them as fictive offspring? How might hormonal changes during pregnancy affect women’s interest in pets? Might cues of a female mate’s infidelity make her male partner less interested in taking care of pets they jointly own? Under what conditions might pets be viewed as a deterrent or an asset to starting new romantic relationships, in the same way that children from a past relationship might? The answers to these questions require placing pet care in its proper context, and you’re going to have quite a hard time doing that without the right theory.

References: Topolski, R., Weaver, J.N., Martin, Z., & McCoy, J. (2013). Choosing between the Emotional Dog and the Rational Pal: A Moral Dilemma with a Tail. Anthrozoös, 26, 253-263. DOI: 10.2752/175303713X13636846944321