Recently, a new comic has been floating around my social news feeds, claiming that it will forever change the way I think about something. It’s not as if my feeds are ever short of such articles, really, but this one gave me the opportunity to examine some research I’ve wanted to write about for some time. In the case of this mind-blowing comic, the concept of privilege is explained through a short story. The concept itself is not a hard one to understand: privilege here refers to cases in which an individual goes through life with certain advantages they did not earn. The comic in question looks at economic privilege: two children are born, but one has parents with lots of money and social connections. As expected, the privileged child ends up doing fairly well for himself, as many of life’s burdens have been removed, while the other ends up working a series of low-paying jobs, eventually in service to the privileged one. The privileged individual declares that nothing has ever been handed to him in life as he is literally being handed some food on a silver platter by the underprivileged individual, apparently oblivious to what his parents’ wealth and connections have brought him.

Stupid, rich baby…

In the interest of laying my cards on the table at the outset, I would count myself among those born into privilege. While my family is not rich or well-connected in the way people typically think about those things, I have never wanted for any of life’s necessities; I have even had access to many luxuries that others have not. Having those burdens removed is something I am quite grateful for, and it has allowed me to invest my time in ways other people could not. I have the hard work and responsibility of my parents to thank for these advantages. These are not advantages I earned, but they are certainly not advantages that just fell from the sky; if my parents had made different choices, things likely would have worked out differently for me. I want to acknowledge my advantages without downplaying their efforts at all.

That last part, however, raises a rather interesting question that pertains to the privilege debate. In the aforementioned comic, the implication seems to be (unless I’m misunderstanding it) that things would likely have turned out equally well for both children if they had been given access to the same advantages in life. Some of the differences each child starts with seem to be the result of their parents’ work, while other parts of that difference are the result of happenstance. The comic appears to suggest that, in this case, the differences were just due to chance: both sets of parents love their children, but one set seems to have better jobs. Luck of the draw, I suppose. However, is that the case for life more generally (you know, the thing the comic intends to make a point about)?

For instance, if one set of parents happens to be more short-term oriented (interested in taking rewards now rather than forgoing them for possibly larger rewards in the future; i.e., not really savers), we could expect that their children will, to some extent, inherit those short-term psychological tendencies; they will also inherit a more meager amount of cash. Similarly, the child of more long-term-focused parents should inherit their proclivities as well, in addition to the benefits those psychologies eventually accrued.

Provided that happened to be the case, what would become of these two children if they both started life in the same position? Should we expect them both to end up in similar places? To put the question another way, let’s imagine that, all of a sudden, the wealth of this world was evenly distributed among the population; no one had more or less than anyone else. In this imaginary world, how long would that state of relative equality last? I can’t say for certain, but my expectation is that it wouldn’t last very long at all. While the money might be equally distributed in the population, the psychological predispositions for spending, saving, earning, investing, and so on are unlikely to be. Over time, inequalities would begin to reassert themselves as those psychological differences, be they slight or large, accumulate from decision after decision.

Clearly, this isn’t an experiment that could be run in real life (people are quite attached to their money), but naturally occurring versions of it do exist. If you want to find a context in which people randomly come into possession of a sum of money, look no further than the lottery. Whether someone wins the lottery at all, and how much they win, is about as close to random as we’re going to get. If the differences between the families in the mind-blowing comic are due to chance factors, we would predict that people who win more money in the lottery should subsequently be doing better in life than those who won smaller amounts. By contrast, if chance factors are relatively unimportant, then the amount won should matter less: whether people win large or small amounts, they might spend it (or waste it) at similar rates and end up in similar places.

Nothing quite like a dose of privilege to turn your life around

This was precisely what Hankins et al. (2010) examined: the authors sought to assess the relationship between the amount of money won in a lottery and the probability of the winner filing for bankruptcy within five years of their win. Rather than removing inequalities and seeing how things shake out, then, this research took the opposite approach: examining a process that generated inequalities and seeing how long it took for them to dissipate.

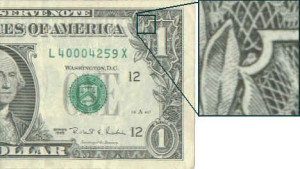

The primary sample for this research was Florida Fantasy 5 winners from April 1993 to November 2002 who had won $600 or more: approximately 35,000 of them after certain screening measures had been applied. These lottery winners were grouped into those who won between $10,000 and $50,000 and those who won between $50,000 and $150,000 (subsequent analyses also examined those who won $10,000 or less, yielding small, medium, and large winner groups).

Of those 35,000 winners, about 2,000 were linked to a bankruptcy filing within five years of their win, meaning that a little more than 1% of winners filed each year on average, a rate comparable to that of the broader Florida population. The first step was to check whether, before their wins, large winners had filed for bankruptcy at rates comparable to small winners; thankfully, they had. In pretty much all respects, those who won a lot of money did not differ from those who won less before their win (including race, gender, marital status, educational attainment, and nine other demographic variables). That’s what one would expect from the lottery, after all.
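For anyone wanting to check the "a little more than 1% per year" figure, the arithmetic is below. It assumes, purely as a simplification, that filings were spread evenly across the five years; the actual year-by-year rates differed between groups, so this is only an average.

$$
\frac{2{,}000}{35{,}000} \approx 0.057 \ \text{(about 5.7\% over five years)}, \qquad \frac{0.057}{5} \approx 0.011 \ \text{(about 1.1\% per year)}
$$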

Turning to what happened after their win: within the first two years, those who won larger sums of money were less likely to file for bankruptcy than smaller winners; in years 3 through 5, however, that pattern reversed, with larger winners becoming more likely to file. The end result of this shifting pattern was that, after five years, large winners were just as likely to have filed for bankruptcy as smaller winners. As Hankins et al. (2010) put it, large cash payments did not prevent bankruptcy; they only postponed it. This result held across a number of different analyses, suggesting that the finding is fairly robust. In fact, when winners eventually did file for bankruptcy, the big winners didn’t have much more to show for it than small winners: those who won between $25,000 and $150,000 had only about $8,000 more in assets than those who had won less than $1,500, and the two groups had comparable debts.

Not much of an ROI on making it rain these days, it seems

At least when it came to one of the most severe forms of financial distress, large sums of cash did not appear to stop people from falling back into poverty in the long term, suggesting that there’s more going on in the world than just poor luck and unearned privilege. Whatever this money was being spent on, it did not appear to be going into sound investments. Perhaps people make more of their own luck than they realize.

It should be noted that this natural experiment does pose certain confounds, perhaps the most important of which is that not everyone plays the lottery. In fact, given that the lottery itself is quite a bad investment, we are likely looking at a non-random sample of people who choose to play it in the first place; people who already aren’t prone to making wise, long-term decisions. Perhaps these results would look different if everyone played the lottery but, as it stands, thinking about these results in the context of the initial comic about privilege, I would have to say that my mind remains un-blown. Unsurprisingly, deep truths about social life can be difficult to sum up in a short comic.

References: Hankins, S., Hoekstra, M., & Skiba, P. (2010). The ticket to easy street? The financial consequences of winning the lottery. Vanderbilt Law and Economics Research Paper, 10-12.